You might want to check that your Airflow scheduler is healthy before triggering a DAG, for instance. You can do this with the following request: `curl --verbose '…' -H 'content-type: application/json' --user "airflow:airflow"`

This request tells you whether the metadatabase and the scheduler are healthy. Keep in mind that it doesn't tell you whether they are under high pressure, for example if too much CPU or memory is being consumed.
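The health check can also be scripted. The Python sketch below is an illustration under assumptions: it assumes an Airflow 2.x instance serving the stable REST API at `http://localhost:8080/api/v1`, and that the health endpoint needs no credentials, as in Airflow 2.x. `fetch_health` and `is_healthy` are hypothetical helper names, and the example evaluates a sample payload so it runs without a server.

```python
import json
import urllib.request

# Assumption: Airflow 2.x stable REST API served on localhost:8080.
HEALTH_URL = "http://localhost:8080/api/v1/health"


def fetch_health(url: str = HEALTH_URL, timeout: float = 5.0) -> dict:
    """Send a GET request to the health endpoint and return the decoded JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def is_healthy(payload: dict) -> bool:
    """True only if both the metadatabase and the scheduler report "healthy"."""
    return all(
        payload.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


# A response shaped like the one Airflow 2.x returns (values are illustrative).
sample = {
    "metadatabase": {"status": "healthy"},
    "scheduler": {
        "status": "healthy",
        "latest_scheduler_heartbeat": "2021-01-01T00:00:00+00:00",
    },
}
print(is_healthy(sample))  # True
```

Against a running instance you would call `is_healthy(fetch_health())` and trigger your DAG only when it returns `True`. As noted above, a healthy status still says nothing about CPU or memory pressure.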

I think you agree with me that monitoring your Airflow instance is important. Once Airflow is up and running, you can execute the following request with curl: `curl --verbose '…' -H 'content-type: application/json' --user "airflow:airflow"`. This request lists all your DAGs, and you should obtain a JSON output describing them.

To pause or unpause a DAG, you "patch" it: `curl '…' -H 'content-type: application/json' --user "airflow:airflow"`. Notice that, unlike the experimental API, the new Airflow REST API has no specific endpoints for this.
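Here is a minimal Python sketch of these two requests. It assumes the Airflow 2.x stable REST API at `http://localhost:8080/api/v1`, where DAGs are listed with `GET /dags` and paused or unpaused with `PATCH /dags/{dag_id}` and a JSON body containing `is_paused`, plus the `airflow`/`airflow` user used in the curl commands. The helper names and the `example_dag` id are hypothetical, and the requests are only built here, not sent.

```python
import base64
import json
import urllib.request

# Assumptions: Airflow 2.x stable REST API on localhost:8080, basic auth
# enabled, and the airflow/airflow user used in the curl examples.
BASE_URL = "http://localhost:8080/api/v1"
USERNAME, PASSWORD = "airflow", "airflow"


def _headers() -> dict:
    """JSON content type plus a Basic Authorization header."""
    token = base64.b64encode(f"{USERNAME}:{PASSWORD}".encode("utf-8")).decode("ascii")
    return {"Content-Type": "application/json", "Authorization": f"Basic {token}"}


def list_dags_request() -> urllib.request.Request:
    """GET /dags: lists the DAGs known to Airflow."""
    return urllib.request.Request(f"{BASE_URL}/dags", headers=_headers(), method="GET")


def set_paused_request(dag_id: str, paused: bool) -> urllib.request.Request:
    """PATCH /dags/{dag_id} with {"is_paused": ...}: pauses or unpauses one DAG."""
    body = json.dumps({"is_paused": paused}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/dags/{dag_id}", data=body, headers=_headers(), method="PATCH"
    )


req = set_paused_request("example_dag", True)  # "example_dag" is a hypothetical DAG id
print(req.get_method(), req.full_url)
# prints: PATCH http://localhost:8080/api/v1/dags/example_dag
```

To actually send a request, pass it to `urllib.request.urlopen` while Airflow is running. Using the generic `PATCH` on the DAG resource, rather than a dedicated pause endpoint, is exactly the design change the text points out.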

Airflow API password

By default, with the official docker-compose file of Airflow, a user admin with the password admin is created. Send your first requestįirst, make sure your auth_backend setting is defined to “.basic_auth”. That will help you to truly understand how they work.

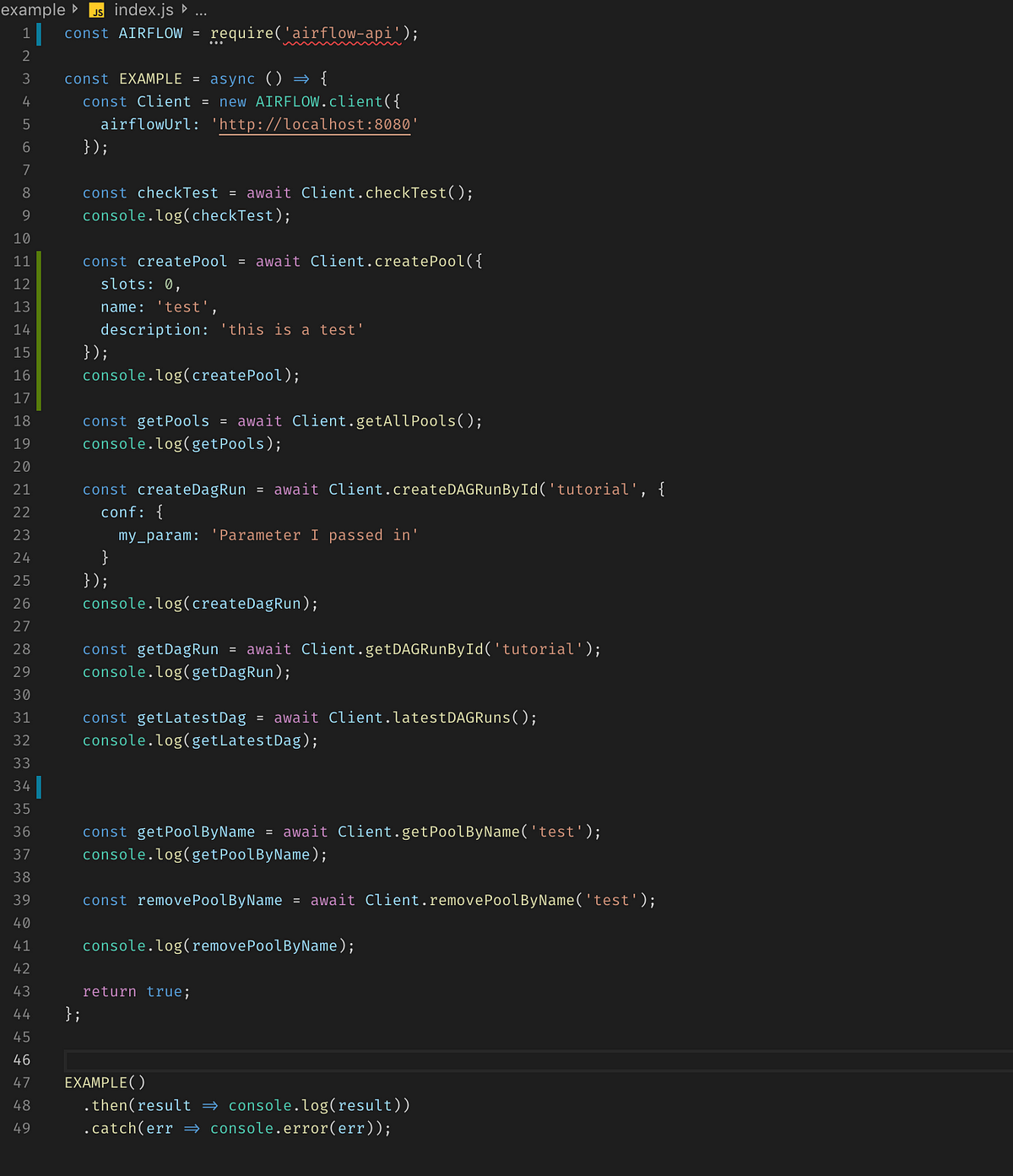

Airflow api code#

That being said, I strongly advise you to take a look at the source code of these different authentication backends. If you want to learn more about this check the documentation here. Yes, you can create your own backend! That’s the beauty of Airflow, you can customize it as much as you need. The username and password must be base64 encoded and sent through the HTTP header. Define the auth_backend to “.basic_auth”.

The great thing is that it works either with users created through LDAP or within Airflow DB.
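To see what "base64 encoded and sent through the HTTP header" means in practice, here is a small Python sketch of the HTTP Basic scheme. The client encodes `username:password` and places it in the `Authorization` header (this is what curl's `--user` option does for you), and a server decodes it before checking the user. The function names are hypothetical, and this is an illustration of the scheme, not Airflow's own backend code.

```python
import base64
from typing import Tuple


def basic_auth_header(username: str, password: str) -> str:
    """Build the Authorization header value for HTTP Basic auth."""
    credentials = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def parse_basic_auth_header(value: str) -> Tuple[str, str]:
    """Reverse step a server performs before looking the user up (e.g. in LDAP or a DB)."""
    scheme, _, token = value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic auth header")
    # Split on the first colon only: the password itself may contain colons.
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password


header = basic_auth_header("airflow", "airflow")
print(header)  # Basic YWlyZmxvdzphaXJmbG93
assert parse_basic_auth_header(header) == ("airflow", "airflow")
```

Note that base64 is an encoding, not encryption: anyone who sees the header can recover the password, so basic auth should travel over HTTPS outside a local setup.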

Airflow API how to

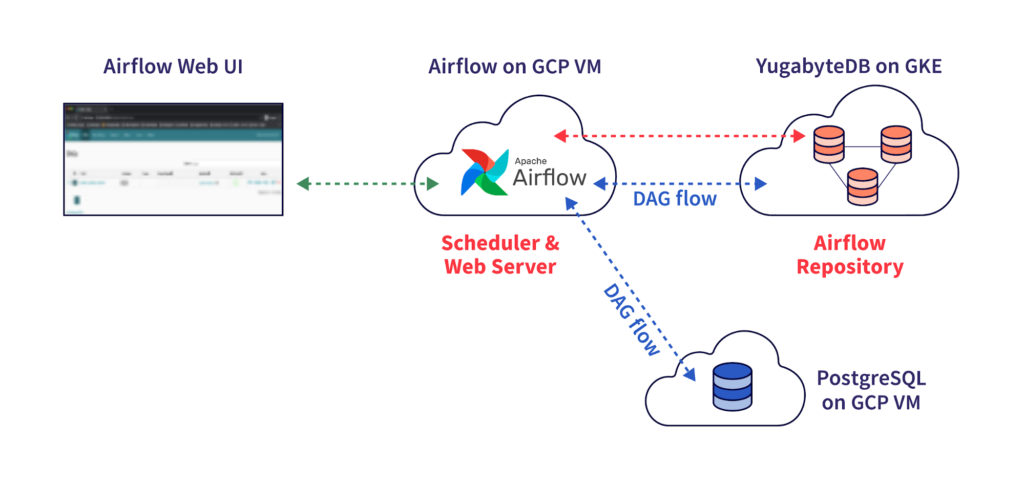

The new stable REST API allows you to perform most of the operations available through the UI, the experimental API and the CLI, at least those used by typical users (you can't, for example, change the configuration of Airflow from the API). Most of the endpoints are CRUD (Create, Read, Update, Delete) operations that give you full control over Airflow resources. The API is well documented thanks to a beautiful Swagger interface, and it includes different ways to authorise clients. To sum up, this new version makes access by third-party tools easy and follows an industry-standard interface with OpenAPI 3.

All right, enough of theory, let's move on to practice 🤓

Get started with the Airflow REST API

At this point, I strongly advise you to try what I'm going to show you on your computer. You need to have Docker and Docker Compose installed. Then, check out the video I made, in which you will learn how to set up Airflow with Docker in only 5 minutes.

To get started with the API, you have to know a very important configuration setting called AUTH_BACKEND: it defines how users of the API are authenticated.
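To illustrate the CRUD idea mentioned above, this Python sketch maps each operation to an HTTP method, using Airflow Variables as the example resource. It assumes the Airflow 2.x stable REST API at `http://localhost:8080/api/v1`, with `POST /variables` for creation and `GET`, `PATCH` and `DELETE` on `/variables/{key}` for the other operations; `variable_request` and `my_var` are hypothetical. Authentication is left out to keep it short, so against a real instance you would also add an `Authorization` header.

```python
import json
import urllib.request

# Assumption: Airflow 2.x stable REST API; Variables serve as the example resource.
BASE_URL = "http://localhost:8080/api/v1"

# Each CRUD operation maps onto one HTTP method.
CRUD_METHODS = {"create": "POST", "read": "GET", "update": "PATCH", "delete": "DELETE"}


def variable_request(operation: str, key: str, value=None) -> urllib.request.Request:
    """Build (but do not send) a request for one CRUD operation on a Variable."""
    method = CRUD_METHODS[operation]
    # Create targets the collection; the other operations target one variable.
    if operation == "create":
        url = f"{BASE_URL}/variables"
    else:
        url = f"{BASE_URL}/variables/{key}"
    data = None
    if operation in ("create", "update"):
        data = json.dumps({"key": key, "value": value}).encode("utf-8")
    return urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method=method
    )


for op in ("create", "read", "update", "delete"):
    req = variable_request(op, "my_var", "42")  # "my_var" is a hypothetical key
    print(req.get_method(), req.full_url)
# POST http://localhost:8080/api/v1/variables
# GET http://localhost:8080/api/v1/variables/my_var
# PATCH http://localhost:8080/api/v1/variables/my_var
# DELETE http://localhost:8080/api/v1/variables/my_var
```

The same pattern (collection URL for creation, item URL for read, update and delete) applies to the other resources exposed by the API.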
